Different types of augmented reality


There are many different types of augmented reality on the market. In this post we define each of them and give detailed information about the ones we cover in our Onirix platform. We focus on the types of AR that can be consumed from a cell phone, paying special attention to those available from web environments (web AR).

These types of augmented reality are usually classified by how the experience is started (the trigger) and how it is tracked. On this basis, we can distinguish a wide range of types:

- Augmented reality on markers

- Augmented reality for images (image tracking)

- Location-based augmented reality (AR with GPS)

- Augmented reality on surfaces (also called World tracking or SLAM)

- Augmented reality on spaces (Spatial Tracking)

- Augmented reality on objects (Object tracking)

- Face tracking or augmented reality with filters on the face

- Body tracking or augmented reality on body parts

- Augmented reality in open spaces (World mapping)

Comparative table of types of augmented reality

Below is a comparison of the different types of AR:

| Type | Trigger / tracker |
|---|---|
| Markers | Non-figurative image (marker) |
| Images (image tracking) | Figurative image |
| Location-based | GPS location |
| Object tracking | Mesh / 3D model |
| Face tracking | Human face |
| Body tracking | Human body, foot, hand |
| World tracking | Horizontal or vertical plane |
| Spatial tracking | Full-room scan (limited environment) |
| World mapping | Scan of large open spaces |

Other classification methods

This is not the only way to divide augmented reality into types; ours gives special weight to mass use through cell phones and web AR. Other studies differentiate types based on other concepts, such as this classification study of the different types of augmented reality [1].

That study proposes an organization that adds other media, such as images projected onto buildings with large projectors, as well as devices such as glasses and headsets (for example, Google Glass or HoloLens).

At Onirix, we prefer the classification above, because almost everyone already carries a powerful and accessible AR device with which to view impactful, high-value experiences: their cell phone.

Types of mobile augmented reality

Below we review each type of augmented reality in detail, with more specific information about the types of AR supported by Onirix and those we are working on.

Augmented reality on markers

Originally, augmented reality relied on detecting non-figurative images (and we still see some cases that use this type of marker). These were patterns that the human eye could not interpret but that were ideal for an algorithm: their simple, sharply defined shapes made them easy to detect and track.
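To see why these markers are so convenient for an algorithm, consider the decoding step. The following Python sketch assumes the marker has already been located in the camera image and sampled into a 4x4 grid of black (1) and white (0) cells. The `MARKERS` dictionary and its IDs are hypothetical, in the spirit of square fiducial markers such as ArUco, and this is not how Onirix implements it. Identifying the marker is just a matter of comparing the grid with each known pattern in its four possible orientations.

```python
from typing import Dict, Optional, Tuple

Grid = Tuple[Tuple[int, ...], ...]

# Hypothetical dictionary of 4x4 markers (1 = black cell, 0 = white cell).
# The patterns have different numbers of black cells, so no rotation of one equals the other.
MARKERS: Dict[int, Grid] = {
    7: ((1, 0, 0, 1),
        (0, 1, 1, 0),
        (0, 1, 0, 0),
        (1, 0, 0, 1)),
    12: ((1, 1, 1, 0),
         (1, 0, 0, 0),
         (0, 0, 1, 1),
         (0, 1, 1, 0)),
}


def rotate90(grid: Grid) -> Grid:
    """Rotate a square grid 90 degrees clockwise."""
    return tuple(zip(*grid[::-1]))


def identify(grid: Grid) -> Optional[Tuple[int, int]]:
    """Return (marker_id, degrees) or None if no known marker matches.

    `degrees` is the clockwise rotation that brings the observed grid back to the
    marker's canonical orientation, which also tells us how the marker is turned.
    """
    current = grid
    for degrees in (0, 90, 180, 270):
        for marker_id, pattern in MARKERS.items():
            if current == pattern:
                return marker_id, degrees
        current = rotate90(current)
    return None
```

Because the patterns consist of a few large, high-contrast cells, this comparison is cheap and robust, which is why early AR relied on such markers.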

These images evolved, and even today you can place AR over QR codes. QR codes are the same kind of image that humans cannot interpret, but at least they appear around us in many kinds of physical formats.

This type of augmented reality has never seen much real use beyond trials and experiments in computer vision departments, because the marker itself has little value as a medium.

In Onirix we support AR on QR codes, although it is a type of tracking that we only offer for specific cases that expressly need it.

Augmented reality on images (Image tracking)

The clear next step was to build experiences on figurative images, i.e. images from our daily life that the human eye recognizes: posters, product packaging, business cards, labels, book covers, etc. In this case the image must first go through a short training process in which the system extracts its key points (distinctive, recognizable points). From there, vision algorithms can detect these images within milliseconds and then track them continuously, keeping the AR content as if it were anchored to them.

This is one of the main types of AR supported in Onirix. Our image tracking post has much more information about this widespread type of augmented reality and its many uses.
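The detection step can be illustrated with a simplified sketch of key point matching. It assumes the key points have already been extracted and described as binary descriptors (detectors such as ORB use 256-bit descriptors; the 16-bit integers here are only for readability). Reference descriptors come from the training phase and frame descriptors from the camera; matches are filtered with Lowe's ratio test, and the `min_matches` threshold is an assumed value. This is a generic computer vision technique, not a description of Onirix's internals.

```python
from typing import Dict, List


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two binary descriptors."""
    return bin(a ^ b).count("1")


def match_descriptors(reference: List[int], frame: List[int], ratio: float = 0.75) -> Dict[int, int]:
    """Map each confidently matched frame descriptor index to a reference descriptor index.

    A match is kept only if the best distance is clearly smaller than the second best
    (Lowe's ratio test), which discards ambiguous key points.
    """
    matches: Dict[int, int] = {}
    if len(reference) < 2:
        return matches  # the ratio test needs at least two candidates
    for i, desc in enumerate(frame):
        ranked = sorted((hamming(desc, ref), j) for j, ref in enumerate(reference))
        (best, best_j), (second, _) = ranked[0], ranked[1]
        if best < ratio * second:
            matches[i] = best_j
    return matches


def image_detected(reference: List[int], frame: List[int], min_matches: int = 3) -> bool:
    """Consider the trained image present when enough key points match."""
    return len(match_descriptors(reference, frame)) >= min_matches
```

In a full tracker, the matched points would then be used to estimate the image's pose (for example with a homography) so the content stays anchored to it.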

Location-based augmented reality (geopositioned AR)

When a GPS position on a map is used to launch AR experiences, we speak of geolocated, or GPS-based, augmented reality. There are two main ways to display AR content in these cases:

- Content floating in real time, tied to the GPS signal: through the camera we see nearby content placed according to its GPS position. This mode is usually not ideal for one reason: GPS error, typically around 5-10 meters.

- Static content that appears when we reach a GPS position: this mode is much more stable and easier to consume, since we get the content we want once we reach a specific point. Onirix includes a module to activate GPS maps in any of your projects.
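The second mode boils down to a proximity check. The sketch below computes the distance between the user and each content point with the haversine formula and triggers the content that lies within a radius. The `GeoContent` type and the 25 m default radius are assumptions for illustration; whatever the value, the radius should comfortably exceed the 5-10 m GPS error mentioned above so content does not flicker in and out.

```python
import math
from dataclasses import dataclass
from typing import List

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in meters


@dataclass
class GeoContent:
    """Hypothetical AR content pinned to a GPS position."""
    name: str
    lat: float
    lon: float
    radius_m: float = 25.0  # assumed trigger radius, larger than typical GPS error


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in meters between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def triggered(user_lat: float, user_lon: float, contents: List[GeoContent]) -> List[str]:
    """Names of the contents whose trigger radius contains the user's position."""
    return [
        c.name
        for c in contents
        if haversine_m(user_lat, user_lon, c.lat, c.lon) <= c.radius_m
    ]
```

The haversine formula assumes a spherical Earth; at these distances that approximation is negligible compared with the GPS error itself.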

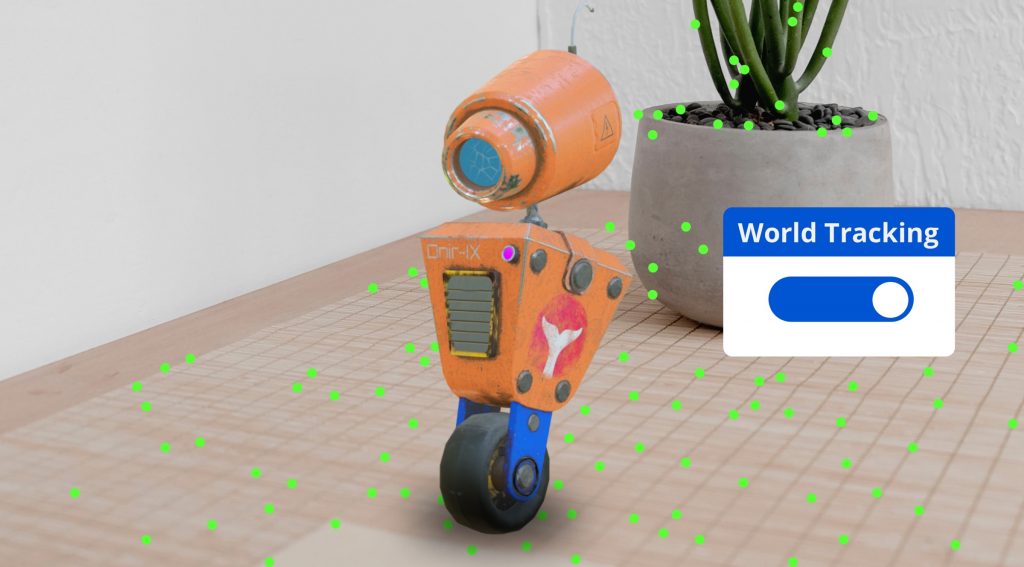

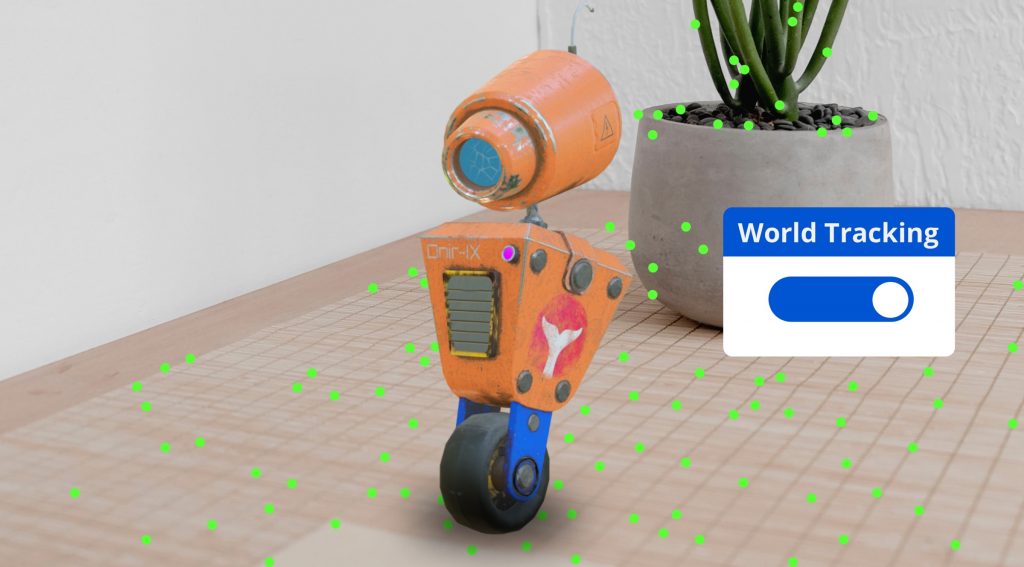

Augmented reality on surfaces (also called World tracking or SLAM)

This is undoubtedly one of the main ways of consuming AR today, as it lets the user place any kind of content freely in front of them: on a table, on the floor, or even floating in the air. It is a complex mode because it requires detecting and processing the user's environment in real time. The big difference from face tracking, for example, is that the environment in front of the user changes with every session, so the algorithm must adapt to any scenario or surface: shapes, colors, textures, lighting, etc.

Building this kind of experience requires an algorithm that can extract the key points of any environment, determine where they are located and keep track of them throughout the experience. It must also adapt to camera movement by combining sensors such as the gyroscope and accelerometer with the visual processing of the camera feed.

That is why it is commonly called World tracking, or SLAM (Simultaneous Localization and Mapping): it works like a real-time detector of the real world that lets you place content and anchor it to a surface.
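Full SLAM is far beyond a short example, but the sensor side can be illustrated with a classic complementary filter that estimates the phone's tilt (pitch). The gyroscope gives smooth angular rates that drift when integrated, while the accelerometer gives a noisy but drift-free reading of gravity; blending the two keeps the best of each. This is a textbook technique, not Onirix's tracker. It assumes one common axis convention (signs differ between platforms), and `alpha = 0.98` is an assumed tuning value.

```python
import math
from typing import Tuple


def accel_pitch(ax: float, ay: float, az: float) -> float:
    """Pitch (radians) implied by the gravity vector measured by the accelerometer."""
    return math.atan2(-ax, math.sqrt(ay * ay + az * az))


def complementary_filter(
    pitch: float,
    gyro_rate: float,
    accel: Tuple[float, float, float],
    dt: float,
    alpha: float = 0.98,
) -> float:
    """Return the updated pitch estimate after one sensor sample.

    gyro_rate: angular velocity around the pitch axis (rad/s); dt: sample period (s).
    """
    gyro_estimate = pitch + gyro_rate * dt  # smooth, but accumulates drift
    accel_estimate = accel_pitch(*accel)    # noisy, but anchored to gravity
    return alpha * gyro_estimate + (1 - alpha) * accel_estimate
```

Even with a biased gyroscope, the estimate stays close to the true angle because the accelerometer term keeps pulling it back. In visual-inertial tracking, the camera's key points play a similar corrective role for the device's position.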

At Onirix we have our own World Tracking system adapted for Android and iOS, which you can test from our Studio platform. More information is available in our detailed documentation on surface-type scenes.

Augmented reality on spaces (Spatial tracking)

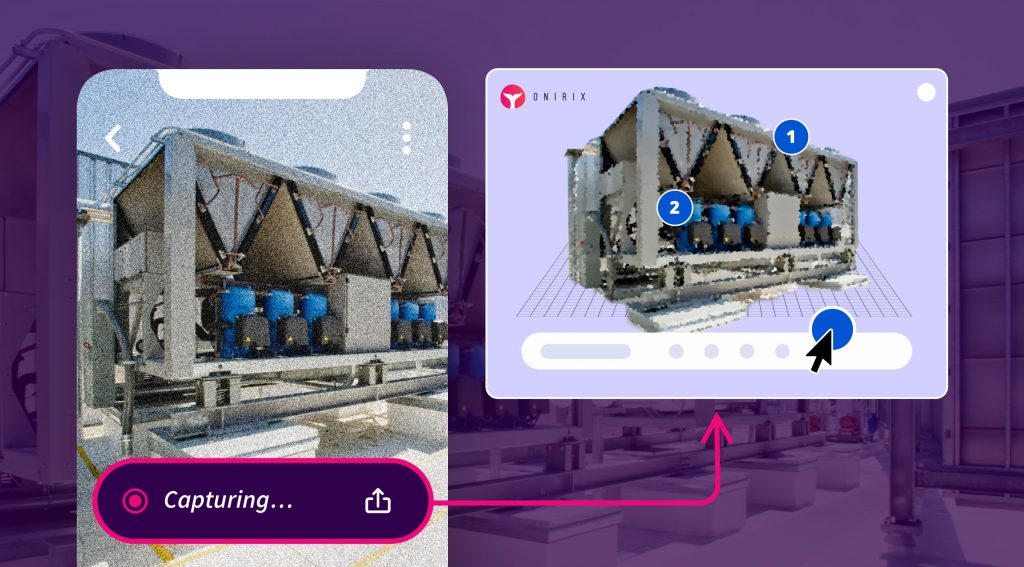

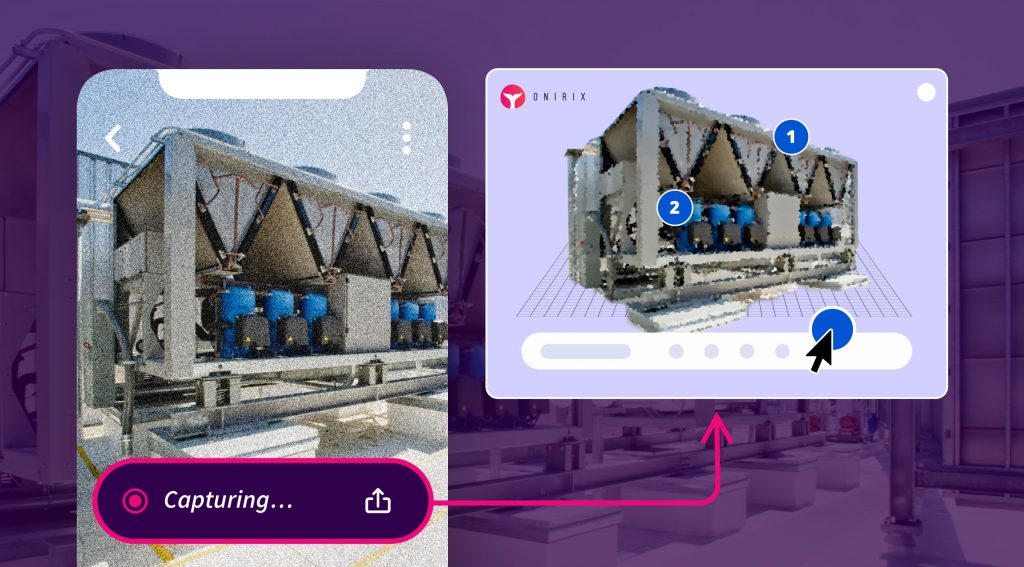

This is the next type of AR we are working to incorporate into Onirix, with web AR support. It combines the two main tracking types we already offer: image tracking and world tracking. Thanks to augmented reality on spaces, the user can locate and detect an enclosed space, such as a complete room.

Imagine, for example, an engine room where an operator has to perform maintenance work. Being able to understand the entire space and track any of its areas makes it possible to offer powerful, impactful experiences in any sector.

Configuring the space where the experience will take place first requires a scanning phase, in which photographs of the whole environment are captured during an extended session. The set of images is then processed together with the other data collected from the environment to generate what is called a mesh or point cloud of the space: a virtual representation of the room we want to work in.

From this representation, content can be configured easily in a tool like Onirix Studio. The phone can then detect that environment and place all the anchored content precisely.
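Once the phone recognizes the scanned room, a spatial tracker can report where the scan's coordinate frame sits inside the live AR session, and content configured relative to the point cloud is placed by applying that rigid transform. The sketch below is a simplification under stated assumptions: the `Pose` type is hypothetical, and it only models a rotation about the vertical axis (a gravity-aligned scan) plus a translation, whereas a real system would use a full 3D rotation.

```python
import math
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Pose:
    """Hypothetical pose of the scanned space in the session frame (y is up)."""
    yaw: float  # rotation about the vertical y axis, in radians
    tx: float
    ty: float
    tz: float


def to_session(pose: Pose, point: Vec3) -> Vec3:
    """Map a point from the scan (point cloud) frame into the live session frame."""
    x, y, z = point
    c, s = math.cos(pose.yaw), math.sin(pose.yaw)
    # Right-handed rotation about y, followed by the translation.
    return (c * x + s * z + pose.tx, y + pose.ty, -s * x + c * z + pose.tz)
```

Because content is stored in the scan's frame, only the pose changes from one session to the next, and every anchored element follows it.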

Augmented reality on objects (Object tracking)

Onirix does not yet support this type of augmented reality, but we hope to add it at some point, especially with web AR support.

The goal is to track a real object as a whole and, therefore, to track any of its parts very accurately. This is normally achieved starting from a 3D replica of the real object (at least its structure). This 3D model does not need a realistic, textured finish, only the structure: it represents the skeleton of the object and is used for the training phase and the later detection of the object.
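A building block of model-based object tracking is projecting the 3D model into the camera image: given a candidate pose, the tracker compares where the model's vertices or edges should appear with what the camera actually sees, and refines the pose to reduce the difference. The sketch below shows that projection with an ideal pinhole camera plus a mean reprojection error. The intrinsics (`fx`, `fy`, `cx`, `cy`) are assumed values, lens distortion is ignored, and the points are assumed to be already expressed in camera coordinates.

```python
import math
from typing import List, Optional, Sequence, Tuple

Point3 = Tuple[float, float, float]
Pixel = Tuple[float, float]


def project(points: Sequence[Point3], fx: float, fy: float, cx: float, cy: float) -> List[Optional[Pixel]]:
    """Project camera-space points (z forward, meters) to pixels with a pinhole model.

    Points at or behind the camera plane (z <= 0) are not visible and map to None.
    """
    pixels: List[Optional[Pixel]] = []
    for x, y, z in points:
        pixels.append(None if z <= 0 else (fx * x / z + cx, fy * y / z + cy))
    return pixels


def mean_reprojection_error(projected: Sequence[Optional[Pixel]], observed: Sequence[Pixel]) -> float:
    """Average pixel distance between visible projected model points and their detections."""
    pairs = [(p, o) for p, o in zip(projected, observed) if p is not None]
    if not pairs:
        return math.inf
    return sum(math.dist(p, o) for p, o in pairs) / len(pairs)
```

A tracker would evaluate this error for candidate poses and keep the one that minimizes it; lower values mean the virtual model lines up better with the real object.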

This type of AR is very useful for experiences on complex objects that require precise instructions and, above all, on objects that move. Its drawback is that the 3D model must be created beforehand for the training phase.

As we saw in the section on spatial tracking, AR on spaces can also help with this type of experience, as long as the objects do not move.

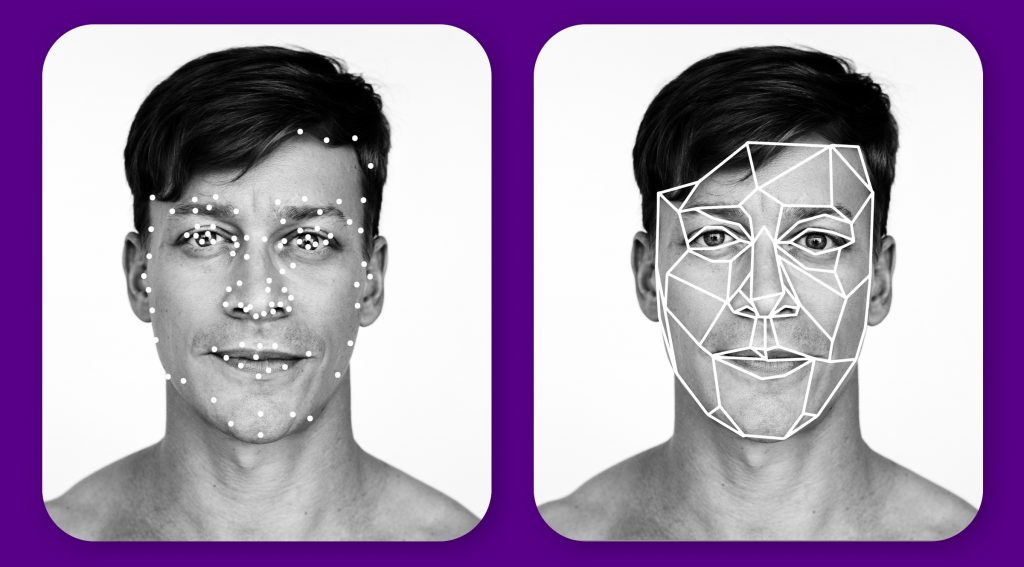

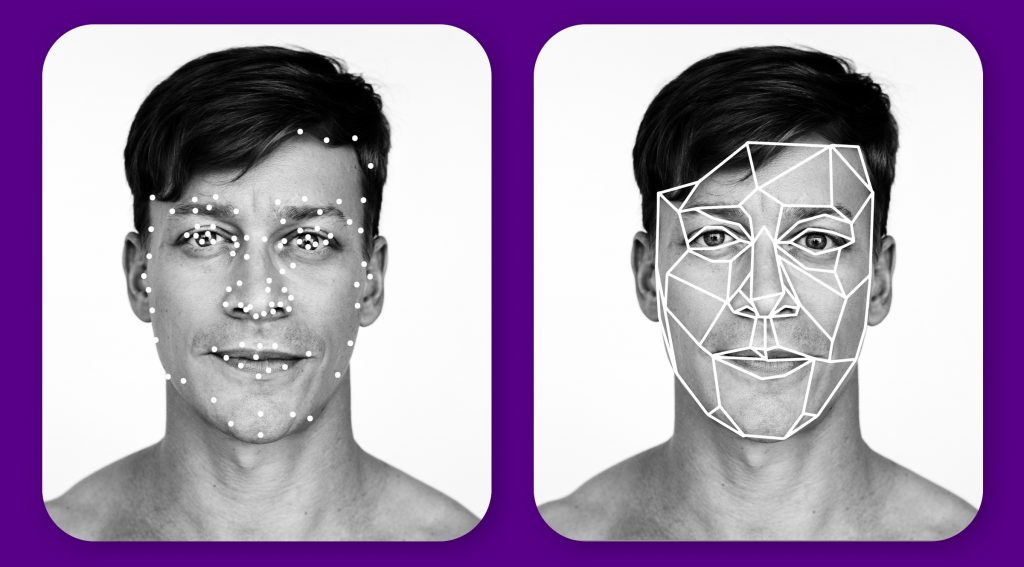

Face tracking or augmented reality with face filters

This is a very widespread type of augmented reality, mainly because of its use in social networks. We have all tried a face filter on Instagram or Snapchat that detects our face and displays visual information on it, showing us wearing makeup or with comic distortions.

These algorithms rely on the fact that people generally share the same facial structure, which makes it relatively simple to build an algorithm that knows it is looking at a human face. To do so, algorithms are trained on databases (datasets) containing the faces of many people. From these datasets, the key points that define facial morphology (nose, mouth, eyes, eyebrows, etc.) can be determined. With all this information, the algorithms can detect a face within milliseconds, track it accurately and therefore place visual content effectively.
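Once the landmarks are known, placing content is mostly geometry on those points. As a sketch, assuming a face tracker has already returned the two eye centers in pixel coordinates (real libraries expose their own landmark sets and indices, so this input format and the `Overlay` type are hypothetical), a glasses overlay can be centered between the eyes, scaled with the distance between them and rotated with the head's roll. The `width_factor` of 2.0 is an assumed ratio.

```python
import math
from dataclasses import dataclass
from typing import Tuple

Point = Tuple[float, float]


@dataclass
class Overlay:
    x: float      # overlay center, in pixels
    y: float
    width: float  # overlay width, in pixels
    angle: float  # in-plane rotation (head roll), in radians


def place_glasses(left_eye: Point, right_eye: Point, width_factor: float = 2.0) -> Overlay:
    """Center glasses between the eyes, scale them with eye distance and rotate with head roll."""
    (lx, ly), (rx, ry) = left_eye, right_eye
    eye_distance = math.hypot(rx - lx, ry - ly)
    return Overlay(
        x=(lx + rx) / 2,
        y=(ly + ry) / 2,
        width=width_factor * eye_distance,  # assumed glasses-to-eye-distance ratio
        angle=math.atan2(ry - ly, rx - lx),
    )
```

Recomputing this on every frame is what makes the filter follow the face as it moves closer, farther or tilts.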

At Onirix we have focused on other types of tracking, mainly because many solutions already cover this type of experience.

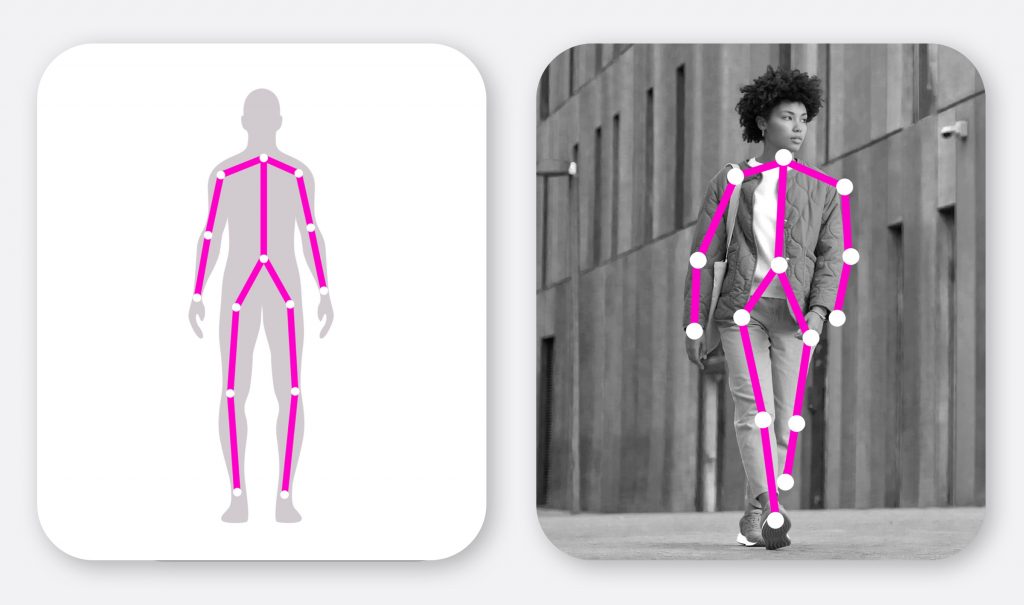

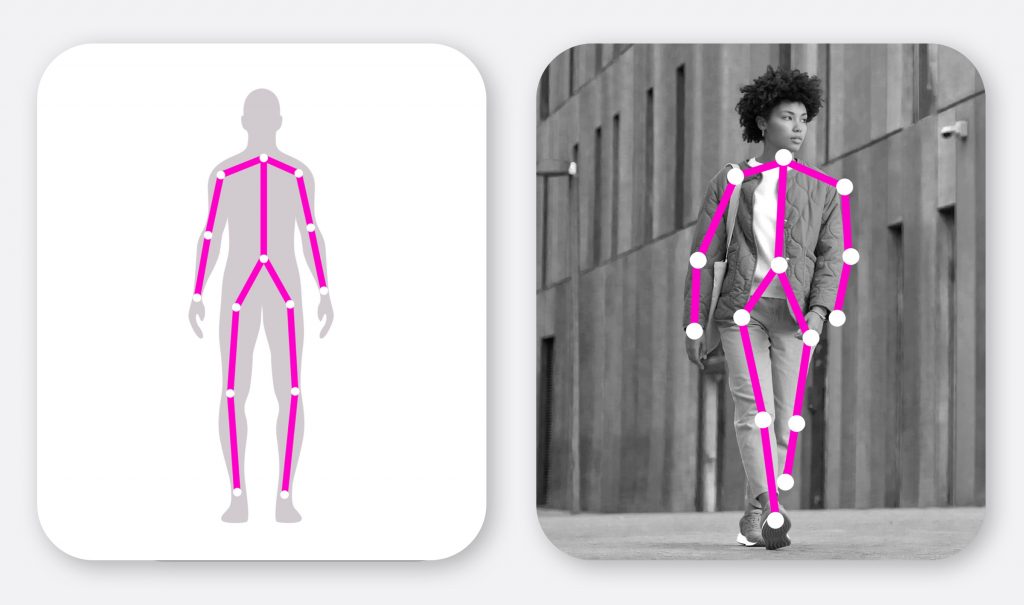

Body tracking or augmented reality on body parts

As in the previous case, if an algorithm can detect the morphology points of a face, it can also do so for the human body, a foot, a hand, etc. This is how face tracking extends into what is known as body tracking.

Once the algorithm is trained and can establish the key points of human morphology (or of a hand or a foot) in real time, content can be overlaid on those points. The challenge here is to make these algorithms run smoothly in web AR, since most existing solutions are tied to dedicated, standalone devices, as is the case with the well-known Kinect.
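Frame-by-frame detections of body key points tend to jitter, which makes overlaid content shake. A common, lightweight remedy that fits the limited processing budget of a browser is exponential smoothing of each key point. The sketch assumes key points arrive as a dictionary of named 2D positions per frame (the names and the `alpha` value are hypothetical); it is one possible approach, not a specific library's API.

```python
from typing import Dict, Tuple

Keypoints = Dict[str, Tuple[float, float]]


class KeypointSmoother:
    """Exponential moving average over per-frame key points to reduce jitter."""

    def __init__(self, alpha: float = 0.5):
        self.alpha = alpha  # weight of the newest detection (0 < alpha <= 1)
        self.state: Keypoints = {}

    def update(self, detected: Keypoints) -> Keypoints:
        """Blend new detections into the smoothed state and return a copy of it.

        Key points missing from this frame keep their last smoothed position.
        """
        for name, (x, y) in detected.items():
            if name in self.state:
                px, py = self.state[name]
                self.state[name] = (px + self.alpha * (x - px), py + self.alpha * (y - py))
            else:
                self.state[name] = (x, y)
        return dict(self.state)
```

A higher `alpha` follows fast movements more closely, while a lower one gives steadier content at the cost of some lag.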

More and better algorithms for detecting these shapes appear every day, making this a very wide field for experimentation with brands and product ads.

Augmented reality in open spaces (World mapping)

This case is similar to spatial tracking, but it starts from scans of much larger, wider environments. Using technologies such as Google Street View, a company like Google can generate scans of many parts of the world. With these scans, augmented content can be placed in various ways. One of them will be through platforms such as Onirix, where we will be able to see a representation of these spaces and place content through the scene editor in a simple, intuitive way.

This is precisely what Google has been using to bring AR content to its own applications, such as Google Maps, an approach that may be adapted for consumption from the web in the near future. Other companies, such as Niantic and Snapchat, are also mapping parts of the world and processing them for internal use or for publication in their SDKs.

At Onirix we do not yet have a World Mapping system, but it is in our plans to enable integrations with the most important tools that support this type of tracking in the near future.

Sources

- JASCHE, Florian, et al. Comparison of different types of augmented reality visualizations for instructions. In Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems. 2021. p. 1-13.

- PEDDIE, Jon. Types of augmented reality. In Augmented Reality: Where We Will All Live. 2017. p. 29-46.